Researchers at GW have invented GCNAX, a flexible and energy-efficient accelerator for graph convolutional neural networks (GCNs). The disclosed design delivers substantially higher performance than existing GCN accelerators. It improves resource utilization and data movement at the same time, and it can reconfigure its loop order and loop fusion strategy, which makes it adaptable to a wide range of GCN configurations. This adaptivity reduces the number of DRAM (Dynamic Random-Access Memory) accesses, which translates into substantial performance improvements and energy savings in practice. In cycle-level simulation, the accelerator achieves, on average, at least a 1.6x speedup and at least 2.3x energy savings compared with existing designs. In summary, the invention substantially improves the performance and energy efficiency of GCN processing.
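To see why loop fusion can cut DRAM accesses, consider a GCN layer computed as Y = A · (X · W). The sketch below uses an idealized cost model that is assumed here and is not taken from the disclosure. Every operand is streamed from DRAM exactly once, the sparse adjacency matrix A is counted by its nonzeros, and in the fused case the intermediate X · W stays in on-chip buffers. The names `GCNLayer` and `dram_traffic_elements` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GCNLayer:
    """Hypothetical description of one GCN layer: Y = A · (X · W)."""
    num_nodes: int     # N: rows/cols of the adjacency matrix A
    in_features: int   # F: columns of the feature matrix X
    out_features: int  # H: columns of the weight matrix W
    adj_nnz: int       # nonzeros stored for the sparse matrix A


def dram_traffic_elements(layer: GCNLayer, fused: bool) -> int:
    """Idealized off-chip traffic (in elements) for one GCN layer.

    Assumes every operand is read from DRAM exactly once and the
    output Y is written once. Without fusion, the intermediate
    X·W (N x H) is also written to DRAM and then read back.
    """
    n, f, h = layer.num_nodes, layer.in_features, layer.out_features
    reads = layer.adj_nnz + n * f + f * h  # A, X and W
    writes = n * h                         # final output Y
    if not fused:
        intermediate = n * h               # X·W spilled off-chip
        writes += intermediate
        reads += intermediate
    return reads + writes


if __name__ == "__main__":
    # Made-up layer shape for illustration only.
    layer = GCNLayer(num_nodes=1000, in_features=500, out_features=16, adj_nnz=5000)
    print("unfused:", dram_traffic_elements(layer, fused=False))  # unfused: 561000
    print("fused:", dram_traffic_elements(layer, fused=True))     # fused: 529000
```

Under these assumptions, fusion always saves 2·N·H elements, the cost of writing the intermediate out and reading it back. A real accelerator with finite buffers must also choose tile sizes and loop orders, which is where most of the remaining DRAM traffic is decided.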

The disclosed invention applies optimization models to the dataflow of GCN computations and reconfigures the loop order and loop fusion strategy to adapt to different GCN configurations.
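As an illustration of how the best dataflow can depend on the layer configuration, the sketch below compares the two multiplication orders of a GCN layer A · X · W. It is a simplified multiply-accumulate (MAC) count model assumed for illustration, not the GCNAX algorithm. A is an N x N sparse matrix with nnz nonzeros, X is N x F, and W is F x H. The function names `mac_counts` and `choose_order` are hypothetical.

```python
def mac_counts(n: int, f: int, h: int, nnz: int) -> tuple[int, int]:
    """Multiply-accumulate counts for the two orders of A · X · W.

    A is N x N with nnz nonzeros (sparse), X is N x F, W is F x H.
    Returns (cost of (A·X)·W, cost of A·(X·W)).
    """
    ax_first = nnz * f + n * f * h  # A·X is N x F, then multiply by W
    xw_first = n * f * h + nnz * h  # X·W is N x H, then multiply A by it
    return ax_first, xw_first


def choose_order(n: int, f: int, h: int, nnz: int) -> str:
    """Pick the cheaper order under this simple MAC-count model."""
    ax_first, xw_first = mac_counts(n, f, h, nnz)
    return "(A*X)*W" if ax_first < xw_first else "A*(X*W)"


if __name__ == "__main__":
    # Hypothetical layer shapes, not benchmarks from the disclosure.
    print(choose_order(n=1000, f=500, h=16, nnz=5000))  # A*(X*W)
    print(choose_order(n=100, f=8, h=64, nnz=400))      # (A*X)*W
```

Both orders share the N·F·H term, so under this model the choice reduces to comparing nnz·F with nnz·H. Computing X·W first wins whenever the output width H is smaller than the input width F, and the opposite order wins when H is larger.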

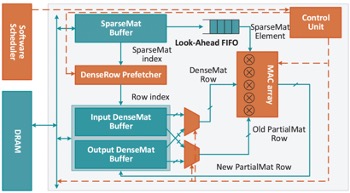

Fig. 1 – Architecture of the disclosed GCNAX accelerator for graph neural networks

Applications:

- Communication optimization for GCN processing systems (multi-core CPU, GPU, FPGA, ASIC, etc.)

- GCN accelerator design for key players in the high-performance computing market

- Computer Vision

- Recommender Systems

- Natural Language Processing

- Other Prediction Systems

Advantages:

- Significantly enhanced performance compared with existing accelerators

- Substantially improved energy efficiency

- Adaptable and flexible design