Researchers at GW have developed an algorithm-hardware co-design framework for Convolutional Neural Networks (CNNs) aimed at mitigating the effects of computational irregularities in existing models. The disclosed framework reduces the model size for the associated system. Specifically, the algorithm uses centrosymmetric matrices as convolutional filters, which cuts the number of weights by nearly 50% and enables structured computational reuse. As a result, the hardware-software co-design framework significantly increases performance and energy efficiency for machine learning applications that use CNNs, such as computer vision, image classification, object and speech recognition, recommender systems, and others. Further, cycle-level simulation has shown that the disclosed accelerator achieves a better energy-delay product than existing accelerators.
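The weight savings and computational reuse described above can be illustrated with a small NumPy sketch. It assumes single-channel 2-D filters and "valid" cross-correlation, and the function names (`make_centrosymmetric`, `conv2d_direct`, `conv2d_centrosymmetric`) are hypothetical illustrations, not the disclosed implementation. A centrosymmetric k x k filter satisfies W[i, j] = W[k-1-i, k-1-j], so only ceil(k²/2) weights need to be stored, and each pair of mirrored inputs can be added before a single multiply by their shared weight.

```python
import numpy as np


def make_centrosymmetric(free: np.ndarray, k: int) -> np.ndarray:
    """Expand ceil(k*k/2) free weights into a k x k centrosymmetric filter.

    Centrosymmetry: W[i, j] == W[k-1-i, k-1-j], i.e. the filter is
    unchanged by a 180-degree rotation.
    """
    n_free = (k * k + 1) // 2
    assert free.size == n_free, "need ceil(k*k/2) free weights"
    flat = np.empty(k * k, dtype=free.dtype)
    flat[:n_free] = free
    # Flat index t mirrors to k*k-1-t, so the second half is the first half reversed.
    flat[n_free:] = free[: k * k - n_free][::-1]
    return flat.reshape(k, k)


def conv2d_direct(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Reference 'valid' 2-D cross-correlation: k*k multiplies per output."""
    k = w.shape[0]
    out = np.zeros((x.shape[0] - k + 1, x.shape[1] - k + 1))
    for p in range(out.shape[0]):
        for q in range(out.shape[1]):
            out[p, q] = np.sum(x[p:p + k, q:q + k] * w)
    return out


def conv2d_centrosymmetric(x: np.ndarray, free: np.ndarray, k: int) -> np.ndarray:
    """Same result with structured reuse: add each mirrored input pair first,
    then multiply once by the shared weight (ceil(k*k/2) multiplies per output)."""
    n_pairs = (k * k) // 2
    out = np.zeros((x.shape[0] - k + 1, x.shape[1] - k + 1))
    for p in range(out.shape[0]):
        for q in range(out.shape[1]):
            patch = x[p:p + k, q:q + k].reshape(-1)
            # patch[::-1][t] is patch[k*k-1-t], the mirror of patch[t].
            paired = patch[:n_pairs] + patch[::-1][:n_pairs]
            acc = np.dot(free[:n_pairs], paired)
            if k % 2 == 1:
                acc += free[n_pairs] * patch[n_pairs]  # unpaired centre tap
            out[p, q] = acc
    return out


rng = np.random.default_rng(0)
k = 3
free = rng.standard_normal((k * k + 1) // 2)
w = make_centrosymmetric(free, k)
x = rng.standard_normal((6, 6))
assert np.allclose(w, w[::-1, ::-1])
assert np.allclose(conv2d_direct(x, w), conv2d_centrosymmetric(x, free, k))
print(free.size, "stored weights instead of", k * k)  # 5 stored weights instead of 9
```

For a 3x3 filter this stores 5 of 9 weights and needs 5 rather than 9 multiplies per output, trading the rest for cheaper additions; as k grows the ratio approaches one half, which is consistent with the "nearly 50%" figure.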

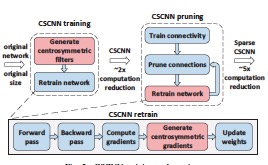

The disclosed algorithm and hardware can be implemented as either a system or a method. Either implementation can include the following aspects: (i) CNN compression and acceleration techniques that use centrosymmetric matrices; (ii) this structure reduces the number of weights and computations with negligible accuracy loss while maintaining computational regularity; and (iii) the disclosed pruning techniques can further reduce computation.

Fig. 1 – One example of the disclosed training and pruning aspects
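The disclosure does not state which pruning criterion it uses, so the sketch below swaps in plain magnitude pruning as a common stand-in. It assumes the same storage layout as a centrosymmetric filter (ceil(k²/2) free weights, centre tap last for odd k), and the helpers `expand`, `prune_free_weights`, and `multiplies_needed` are hypothetical. The point it illustrates is that pruning a shared weight removes both mirrored taps at once, so the filter stays centrosymmetric while the multiply count drops, provided the hardware can skip zero weights (also an assumption).

```python
import numpy as np


def expand(free: np.ndarray, k: int) -> np.ndarray:
    """Rebuild the k x k centrosymmetric filter from its free weights."""
    n_free = (k * k + 1) // 2
    flat = np.concatenate([free, free[: k * k - n_free][::-1]])
    return flat.reshape(k, k)


def prune_free_weights(free: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero the smallest-magnitude free weights (hypothetical magnitude criterion).

    Each free weight stands for a mirrored pair of taps, so pruning it removes
    both taps at once and the filter stays centrosymmetric.
    """
    n_prune = int(round(sparsity * free.size))
    pruned = free.copy()
    if n_prune > 0:
        pruned[np.argsort(np.abs(free))[:n_prune]] = 0.0
    return pruned


def multiplies_needed(free: np.ndarray) -> int:
    """Multiplies per output in the add-then-multiply scheme, assuming zeros are skipped."""
    return int(np.count_nonzero(free))


free = np.array([0.9, -0.05, 0.4, 0.01, 1.2])  # 3x3 filter, centre tap last
pruned = prune_free_weights(free, sparsity=0.4)
w = expand(pruned, 3)
assert np.allclose(w, w[::-1, ::-1])  # still centrosymmetric
print(multiplies_needed(free), "->", multiplies_needed(pruned))  # 5 -> 3
```

In a real training flow, pruning of this kind is usually interleaved with retraining to recover accuracy; that step is omitted here.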

Applications:

- Computer vision applications such as image classification and object recognition

- Speech recognition

- CNN accelerator designs for the high-performance computing market

Advantages:

- Reduced model size compared with models on existing accelerators, while maintaining computational regularity and accuracy

- Improved energy-delay product in comparison to existing accelerators

- Improved energy efficiency

- Enhanced performance and speed